Re-thinking How Humans Collaborate with Intelligent Machines

Joint action has been defined as:

“social interaction whereby two or more individuals coordinate their actions in space and time to bring about a change in the environment” (Sebanz et al., 2006).

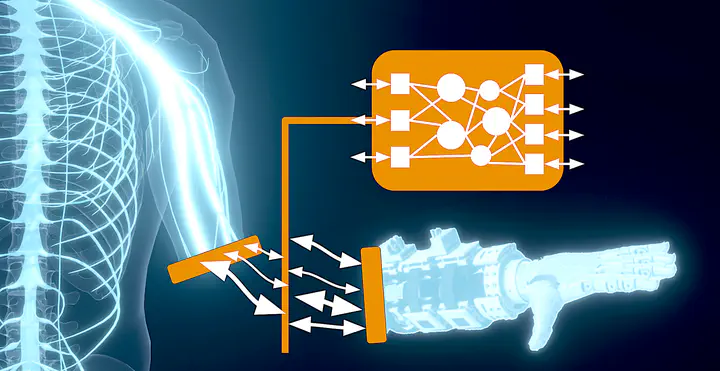

A long-running theme of our lab’s research is understanding the algorithms and architectures required for fruitful joint action between humans and machines, so that we can build machines able to represent, predict, and act (communicate) in concert with a tightly coupled human partner. To date, we have developed real-time machine learning methods that allow a machine agent to represent, predict, adapt to, act upon, and give feedback on a person’s activity and intentions while that person pilots a robotic limb or other assistive technology (Edwards et al. 2016; Gunther et al. 2020; Parker et al. 2019; Sherstan et al. 2018, 2020). Our ongoing work aims to weave these concrete cases into a consistent paradigm for human interaction with assistive intelligent technology, wherein we:

- Identify and quantify the contributions that real-time prediction learning and temporal-difference learning of state and action values make to the acquisition of communicative capital (Pilarski et al. 2017) between a person and a machine;

- Understand the degree to which this aligns with the acquisition and adaptation of predictive models found in human-human joint action; and

- Demonstrate how, by adopting a prediction-learning viewpoint on human-machine interaction, we can observe and enable emergent communication and joint action between a person and their technology, resulting in greater functionality for people with amputations and other physical differences during their activities of daily living (as suggested in Parker et al. 2019; Kalinowska et al. 2023).

This detailed program of study of predictive knowledge in humans and machines has led us to propose new conceptual frameworks for human-prosthesis interaction that embrace continual learning and multiagent thinking: Communicative Capital (Pilarski et al. 2017; Mathewson et al. 2023), joint action in prosthetic interaction (Dawson et al. in review), and Pavlovian Signalling (Pilarski et al. 2022).